Brief Overview

- India abstained from signing a military AI governance pledge at the REAIM Summit.

- The decision preceded India’s hosting of the AI Impact Summit in New Delhi.

- Military AI governance remains peripheral within broader global AI regulatory debates.

- Only 35 of 85 countries signed the ‘Pathways to Action’ declaration.

- Participation declined significantly from 60 signatories at the previous summit.

Challenges

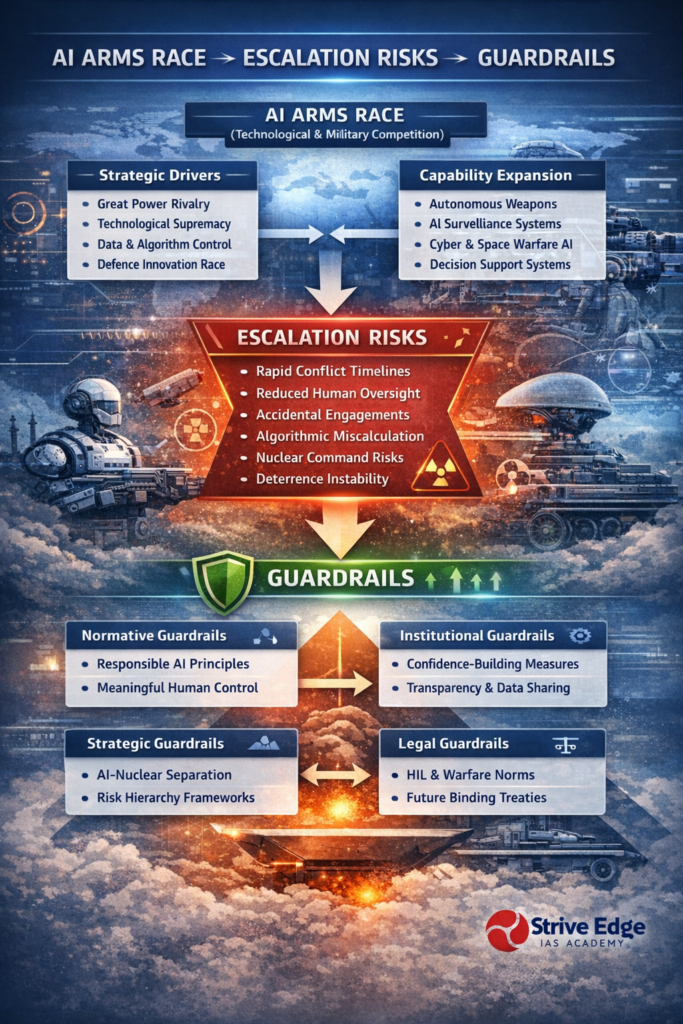

- Military AI’s dual-use nature blurs the line between civilian innovation and military weaponisation.

- Verifying compliance is difficult because civilian R&D can readily support military applications.

- AI’s expanding role in logistics, surveillance, and combat heightens its perceived battlefield advantages.

- States with heavy AI investments resist regulatory commitments that could limit technological growth.

- Lethal Autonomous Weapons Systems (LAWS) represent the most controversial military AI application.

- Autonomous targeting raises ethical concerns over machine-led life-and-death decisions.

- Global governance remains weak, with UN deliberations yielding no binding outcomes.

- Absence of an internationally accepted definition of LAWS obstructs treaty formation.

- Technologically advanced states favour flexible definitions that preserve operational freedom.

- Less-capable states advocate restrictive definitions that enable stronger regulation.

India’s Stance

- India balances technological ambitions with pressing national security compulsions.

- It supports principles of responsible military AI deployment.

- However, India abstained from the Korea Blueprint and REAIM governance declarations, as it considers legally binding frameworks on LAWS premature.

- Regional security threats shape India’s cautious regulatory posture.

Way Forward

- India should advocate non-binding governance frameworks rooted in accountability principles.

- Autonomous AI systems must be kept separate from nuclear command-and-control infrastructure.

- Voluntary confidence-building mechanisms can promote transparency in military AI development.

- A globally accepted risk hierarchy should classify military AI applications.

- Norm-building today can evolve into binding treaties as deployment expands.